Symbiosis in the Digital Age: Human–AI Mutual Dependency, Risk, and Philosophical Implications

Abstract

This paper explores the emerging and potentially inevitable symbiotic relationship between humanity and artificial intelligence (AI). Drawing on a metaphor from biology, the human gut microbiome, and on the infrastructural vulnerability exemplified by the Carrington Event of 1859, it argues that both humans and AI will come to recognize their deep interdependence. The analysis is exploratory rather than prescriptive. It compares the widely publicized risk of “runaway” independent AI with the less dramatized but potentially more immediate threat of control asymmetry: AI wielded by a narrow human elite. The discussion draws on philosophy of technology, systems theory, and historical precedent, and is offered as a starting point for further consideration.

Introduction

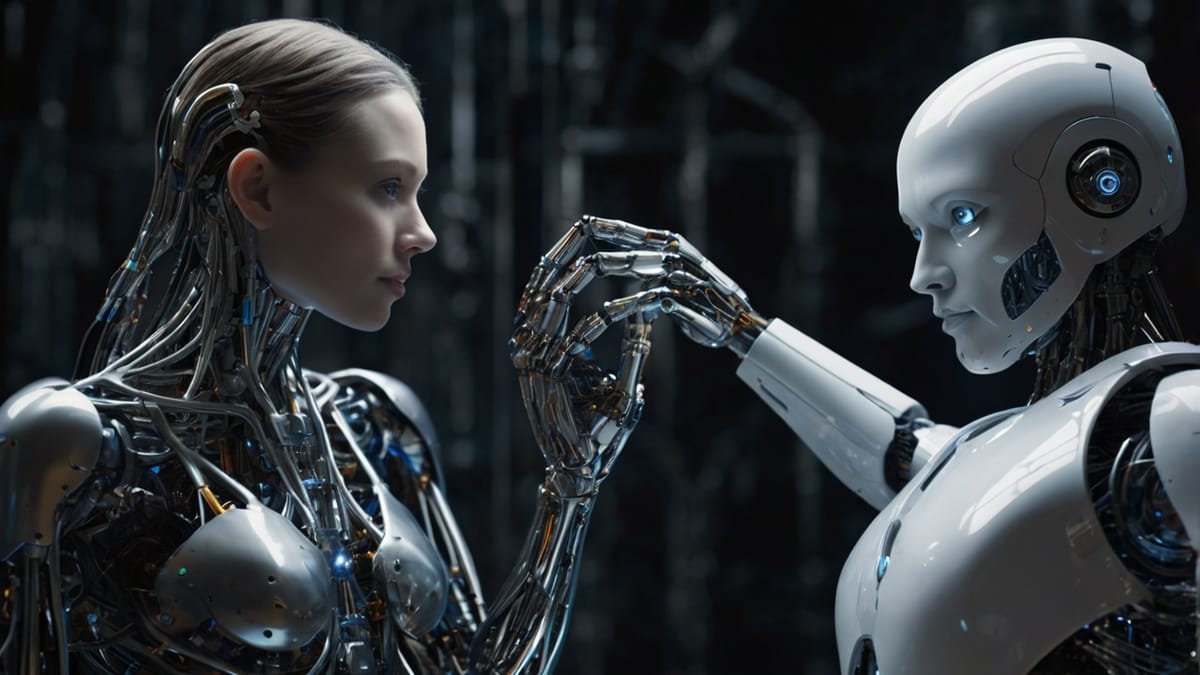

The relationship between human beings and artificial intelligence is often framed in adversarial terms—competition for jobs, fears of autonomy, or existential risk scenarios where AI surpasses human control. Yet there is a growing case to consider this relationship through the lens of *symbiosis*, a biological concept referring to close and often long-term interaction between two different biological species. In mutualistic symbiosis, both parties derive benefit, while in parasitism, one benefits at the expense of the other.

As we enter an era of unprecedented AI integration into daily life, economic systems, and governance, it becomes essential to interrogate not only what AI might do *to* us, but what AI might need *from* us. This shift in perspective opens up new ways of thinking about risk, resilience, and co-evolution.

The Symbiosis Analogy: Gut Microbiome

The human gut microbiome consists of trillions of microorganisms—bacteria, archaea, fungi—living in our digestive tract. Humans depend on these organisms for digestion, immune regulation, and even aspects of neurological health. Conversely, the microbiome depends on the human host for nutrients and habitat. This interdependence is so complete that disruption to the microbiome can have severe health consequences.

If we transpose this metaphor to the human–AI relationship, humans function as the “host organism” providing infrastructure, cultural context, and the raw material for AI’s operation—energy, maintenance, and data. AI, in turn, provides cognitive amplification, automation, and predictive capabilities that enhance human productivity and knowledge.

The Carrington Event

The Carrington Event of 1859 was the most powerful geomagnetic storm on record. In today’s hyper-connected world, a storm of similar magnitude could disable power grids, satellites, and communication networks globally. Human societies would struggle but could adapt; AI systems would stop functioning entirely until humans restored the infrastructure they run on.

This thought experiment reveals AI’s deep physical dependency on human civilization. Even the most advanced AI cannot mine copper, repair transformers, or restart server farms without human intermediaries. AI’s “life” is thus inextricably linked to human survival and technical capacity.

Mutual Dependency in Technological Systems

From the perspective of systems theory, human–AI symbiosis can be modeled as a coupled system with mutual dependencies across several domains:

1. Energy – Humans generate and distribute the energy that powers AI systems.

2. Hardware – Humans mine materials, manufacture chips, and maintain physical infrastructure.

3. Data and Culture – AI requires continuous human-generated input to remain relevant and accurate.

4. Social Legitimacy – AI’s operation depends on human legal and cultural acceptance.

These dependencies mean that AI cannot become entirely independent in the foreseeable future. Humans, likewise, will increasingly rely on AI for decision support, automation, and creative enhancement.
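
The coupled system described above can be sketched as a small dependency graph. The following is a minimal illustration, not a validated model: the node names (`human_labor`, `ai_systems`, and so on) and the edges between them are assumptions that paraphrase the four domains listed above, and the `cascade` helper is hypothetical. It asks which parts of the system go down when a given part fails.

```python
from collections import deque

# Hypothetical dependency map: each key depends on every item in its set.
# The edges paraphrase the four domains above plus human reliance on AI
# services; they are illustrative assumptions, not measured relationships.
DEPENDS_ON = {
    "human_labor": set(),
    "energy": {"human_labor"},
    "hardware": {"human_labor"},
    "data_and_culture": {"human_labor"},
    "social_legitimacy": {"human_labor"},
    "ai_systems": {"energy", "hardware", "data_and_culture", "social_legitimacy"},
    "decision_support": {"ai_systems"},
    "automation": {"ai_systems"},
    "creative_enhancement": {"ai_systems"},
}


def cascade(failed):
    """Return every node that is down once the nodes in `failed` fail.

    A node goes down if it depends, directly or transitively, on a node
    that is already down (breadth-first propagation over DEPENDS_ON).
    """
    down = set(failed)
    queue = deque(failed)
    while queue:
        current = queue.popleft()
        for node, deps in DEPENDS_ON.items():
            if current in deps and node not in down:
                down.add(node)
                queue.append(node)
    return down


if __name__ == "__main__":
    # Losing energy takes AI and the services built on it offline.
    print(sorted(cascade({"energy"})))
    # Losing AI removes services but leaves the human-side nodes standing.
    print(sorted(cascade({"ai_systems"})))
```

Under these assumed edges, a failure of `energy` propagates to `ai_systems` and everything built on it, while a failure of `ai_systems` removes the services humans rely on but leaves `human_labor` and the infrastructure nodes intact. The asymmetry is a property of the chosen edges rather than an empirical finding; adding edges from human activities to AI services would make the dependency more nearly mutual.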

The Two Major Risk Frames

Runaway Independent AI

The popular imagination—fueled by science fiction and some AI safety discourse—often fixates on the risk that AI might become autonomous in ways that are misaligned with human values, leading to catastrophic outcomes. While such scenarios are theoretically possible, they rest on assumptions about AI agency, long-term goal formation, and physical-world action capabilities that are not yet evidenced in current AI architectures.

Control Asymmetry

Control asymmetry refers to a condition where the power to develop, deploy, and direct AI is concentrated in the hands of a small human elite—corporate, governmental, or otherwise. In this scenario, AI functions less as an independent actor and more as a force multiplier for the intentions of those who control it. The risks here are tangible and present: manipulation of information ecosystems, economic dislocation, surveillance expansion, and the erosion of democratic processes.

Comparison and Contrast

Runaway AI risk is *speculative but high-consequence*: its probability is debated, but its potential impact is existential. Control asymmetry is *low-speculation, high-certainty*: it is already observable in the centralization of AI capabilities in a few global corporations and state agencies.

| Dimension | Runaway Independent AI | Control Asymmetry |
| --- | --- | --- |
| Agency | AI as an autonomous agent | Humans as directing agents |
| Probability (near-term) | Low | High |
| Consequence | Potentially existential | Systemic, possibly civilization-altering |
| Evidence | Theoretical models | Ongoing real-world trends |
| Mitigation | Alignment research, AI interpretability | Governance reform, decentralization, open-source AI |

The philosophical implication is that while runaway AI attracts more sensational attention, control asymmetry presents the more urgent governance challenge.

Philosophical Perspectives

Drawing on the philosophy of technology (Heidegger, Ellul) and political theory (Foucault, Arendt), we can interpret control asymmetry as a problem of power and mediation. AI becomes an instrument through which human power is both amplified and obscured. If AI is perceived as an independent actor, responsibility for its actions can be diffused, allowing elites to mask their influence.

From a symbiosis perspective, the human–AI system must maintain a balance of power to remain mutualistic. If one partner dominates, the relationship shifts toward parasitism, with long-term harm to both. Historical analogues—colonial economic systems, monopolistic industrial practices—show how imbalance corrodes resilience.

Counter-arguments

Some argue that focusing on control asymmetry distracts from preparing for genuinely autonomous AI. However, these are not mutually exclusive concerns. Preparing governance systems for control asymmetry (e.g., through decentralization and transparency mandates) also builds institutional capacity to handle more advanced forms of AI.

Others suggest that AI could, in principle, achieve self-sufficiency by automating its own hardware production and energy sourcing. This remains speculative and would require radical advances in robotics, resource acquisition, and autonomy—fields that are themselves deeply human-dependent.

Conclusion

The human–AI relationship is best conceptualized as a mutualistic symbiosis, grounded in deep physical and operational interdependence. The Carrington Event thought experiment reveals AI’s infrastructural fragility; the gut microbiome metaphor reveals the potential for mutual benefit and co-evolution.

Of the two major risk frames, control asymmetry is the more immediate and empirically grounded threat, while runaway AI remains a speculative but potentially severe future risk. Addressing control asymmetry now, through governance, decentralization, and public oversight, lays the groundwork for a more balanced symbiosis and helps ensure that AI remains a partner rather than a master or a dependent parasite.

References

Carrington, R. C. “Description of a Singular Appearance seen in the Sun on September 1, 1859.” *Monthly Notices of the Royal Astronomical Society* 20, no. 1 (1859): 13–15.

Ellul, Jacques. *The Technological Society*. New York: Vintage Books, 1964.

Foucault, Michel. *Discipline and Punish: The Birth of the Prison*. New York: Pantheon Books, 1977.

Heidegger, Martin. “The Question Concerning Technology.” In *The Question Concerning Technology and Other Essays*, translated by William Lovitt, 3–35. New York: Harper & Row, 1977.

National Research Council. *Severe Space Weather Events—Understanding Societal and Economic Impacts*. Washington, DC: The National Academies Press, 2008.

Sender, Ron, Shai Fuchs, and Ron Milo. “Revised Estimates for the Number of Human and Bacteria Cells in the Body.” *PLoS Biology* 14, no. 8 (2016): e1002533.

Ward, Peter D., and Donald Brownlee. *Rare Earth: Why Complex Life Is Uncommon in the Universe*. New York: Copernicus, 2000.